Dynamic Performer System + video synthesis

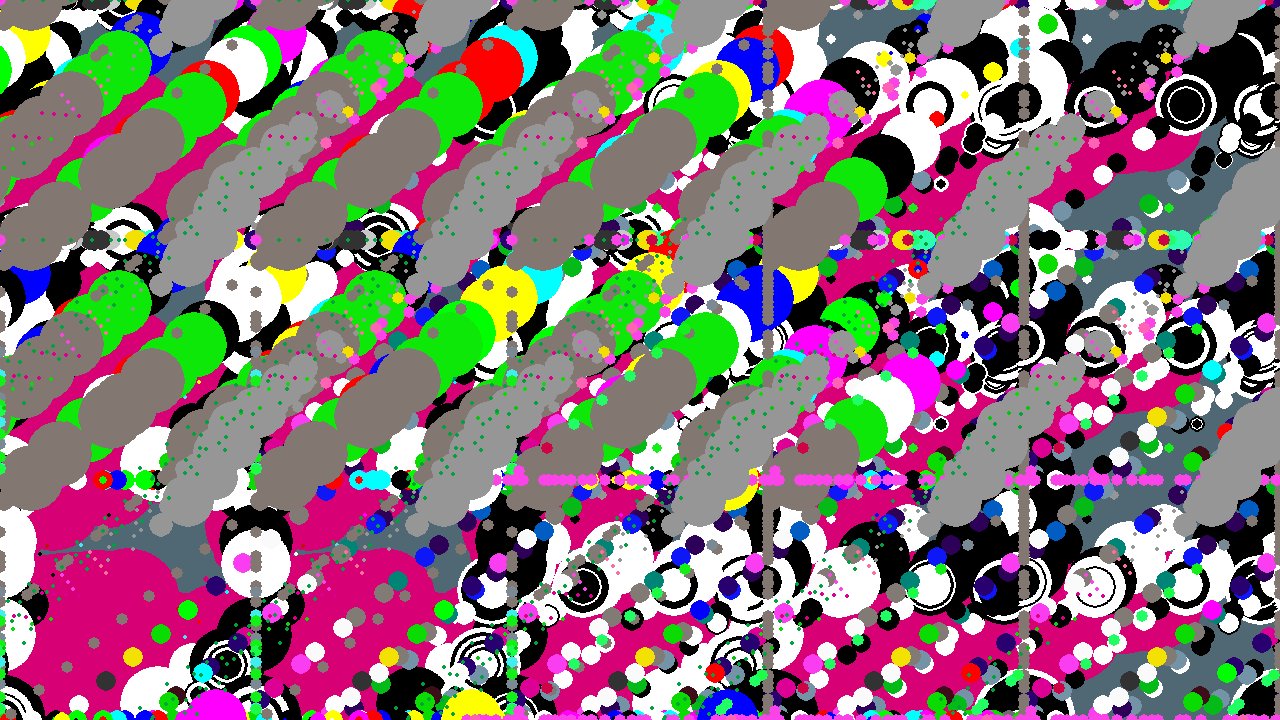

The performer improvises musical sequences in sync with visual expressions.

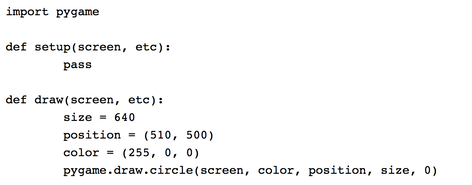

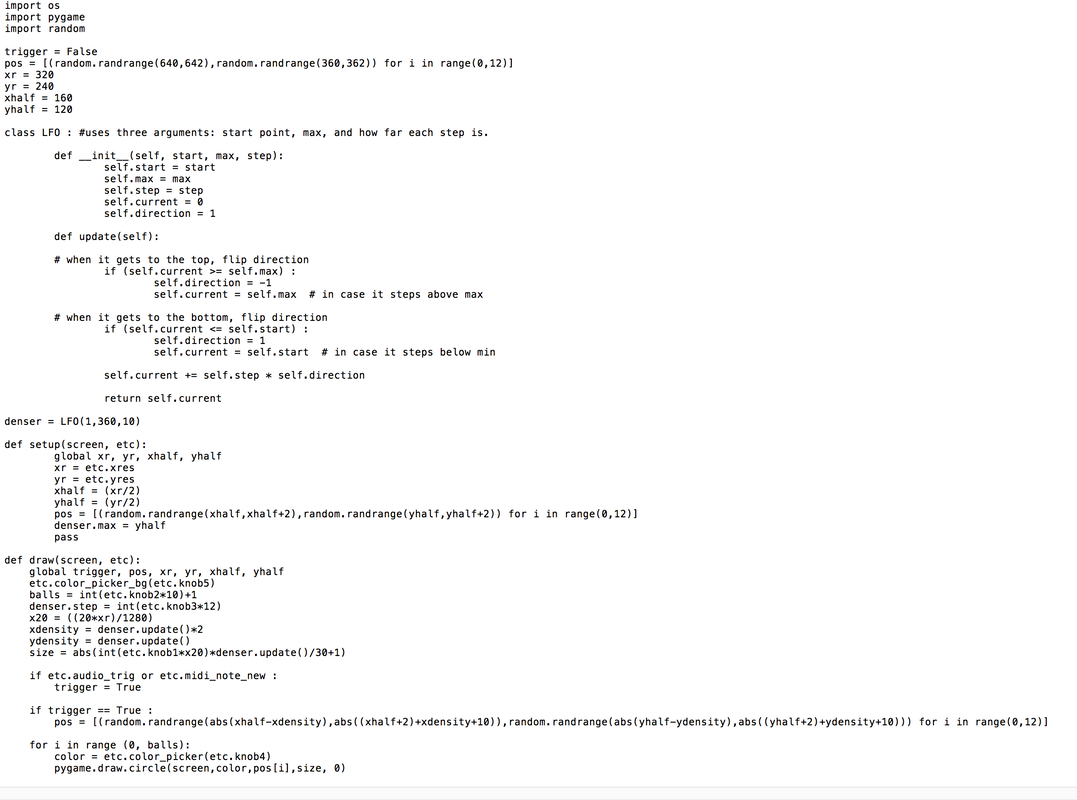

The video synthesizer runs on Python.
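
On the ETC, visuals come from Python scripts ("modes"). The sketch below does not reproduce the ETC's actual mode API. It uses a hypothetical function, `waveform_points`, and plain lists to show the core idea behind an audio-reactive mode: each incoming audio sample becomes a point on screen, and a knob scales the amplitude. The 1280x720 resolution and the 16-bit sample range are assumptions.

```python
# Hypothetical sketch of an audio-reactive frame, NOT the ETC's actual mode API.
def waveform_points(samples, gain, width=1280, height=720, full_scale=32768):
    """Map one buffer of audio samples to (x, y) pixel coordinates.

    samples      -- signed integers, e.g. 16-bit PCM values in [-32768, 32767]
    gain         -- knob position in [0.0, 1.0] that scales the vertical swing
    width/height -- output resolution in pixels (1280x720 is an assumption)
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to draw a line")
    mid = height / 2
    step = (width - 1) / (len(samples) - 1)  # spread samples across the full width
    points = []
    for i, s in enumerate(samples):
        # Normalize to [-1, 1], scale by the knob, and flip so positive goes up.
        y = round(mid - (s / full_scale) * gain * mid)
        y = min(max(y, 0), height - 1)  # keep the point on screen
        points.append((round(i * step), y))
    return points

# One short frame: silence, a positive peak, silence, a negative peak, silence.
frame = [0, 16384, 0, -16384, 0]
print(waveform_points(frame, gain=1.0))
```

On each frame, a mode would draw a line through these points. Turning the knob toward 0 flattens the waveform to a horizontal line at mid-screen.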

Here I combine hardware and software controllers (XY pads, potentiometers/encoders, and 3-axis motion sensors) to extend expressive gestures.
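
All of these controllers come down to the same task: turning a raw reading into a usable visual parameter. The minimal sketch below assumes every controller arrives as a 7-bit MIDI CC value (0 to 127). In practice, Max/MSP or a motion sensor could send higher-resolution data. The parameter names (`hue`, `zoom`) and their ranges are hypothetical. The sketch also shows an exponential moving average, which is one common way to tame a jittery motion sensor.

```python
# Hypothetical sketch: normalize controller input and smooth a jittery motion sensor.
# The 0-127 range is standard 7-bit MIDI CC; parameter names and ranges are illustrative.

def cc_to_unit(value):
    """Convert a 7-bit MIDI CC value (0-127) to a float in [0.0, 1.0]."""
    if not 0 <= value <= 127:
        raise ValueError(f"MIDI CC value out of range: {value}")
    return value / 127

def scale(unit, lo, hi):
    """Linearly map a value in [0, 1] onto the range [lo, hi]."""
    return lo + unit * (hi - lo)

class Smoother:
    """One-pole (exponential moving average) filter for noisy sensor data."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # 0 < alpha <= 1; smaller = smoother but laggier
        self.state = None

    def __call__(self, x):
        if self.state is None:
            self.state = x  # start from the first reading instead of from zero
        else:
            self.state += self.alpha * (x - self.state)
        return self.state

# An XY pad gives two axes at once; here X drives hue and Y drives zoom.
x_cc, y_cc = 64, 127
hue = scale(cc_to_unit(x_cc), 0.0, 360.0)  # degrees
zoom = scale(cc_to_unit(y_cc), 0.5, 2.0)

# A sudden jump in tilt is eased in over several readings.
tilt = Smoother(alpha=0.5)
smoothed = [tilt(r) for r in [0.0, 1.0, 1.0, 1.0]]
print(hue, zoom, smoothed)
```

Keeping normalization separate from scaling means that swapping a controller only changes `cc_to_unit`, while the visual ranges stay the same.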

DPS ver. 1.5 combines the ETC by Critter & Guitari (video synth), Roland TR-08 (master clock + MIDI controller), Teenage Engineering OP-1 (3-axis motion sensor), Ableton Live (audio loop sync), and Max/MSP (XY pad).
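
With the TR-08 as master clock, the other devices follow its MIDI timing clock. This clock sends 24 pulses per quarter note, so both the tempo and the current sequencer step can be recovered by counting ticks. The sketch below assumes the tick arrival times have already been read from a MIDI port (no MIDI library is used) and that the pattern is 16 sixteenth-note steps long. The function names are hypothetical.

```python
# Sketch: derive tempo and sequencer position from MIDI timing clock.
# Tick timestamps are assumed to come from some MIDI input (not shown).
PPQN = 24  # MIDI timing clock (status byte 0xF8) sends 24 pulses per quarter note

def bpm_from_ticks(timestamps):
    """Estimate tempo from arrival times, in seconds, of consecutive clock ticks."""
    if len(timestamps) < 2:
        raise ValueError("need at least two ticks")
    # Mean tick interval: the consecutive differences telescope to last - first.
    mean_interval = (timestamps[-1] - timestamps[0]) / (len(timestamps) - 1)
    return 60.0 / (mean_interval * PPQN)

def step_from_ticks(tick_count, steps=16, ticks_per_step=PPQN // 4):
    """Return the current step of a pattern of sixteenth notes.

    tick_count counts ticks elapsed since the pattern started (tick 0 = step 0).
    With 24 PPQN, a sixteenth note lasts 6 ticks.
    """
    return (tick_count // ticks_per_step) % steps

# Simulated ticks at 120 BPM: one quarter note (0.5 s) spans 24 ticks.
ticks = [i * 0.5 / PPQN for i in range(25)]
print(round(bpm_from_ticks(ticks), 3))
print(step_from_ticks(6), step_from_ticks(95), step_from_ticks(96))
```

Averaging over many ticks, rather than using a single interval, keeps the estimate stable when individual MIDI messages arrive slightly early or late.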

Visual art with Python source code

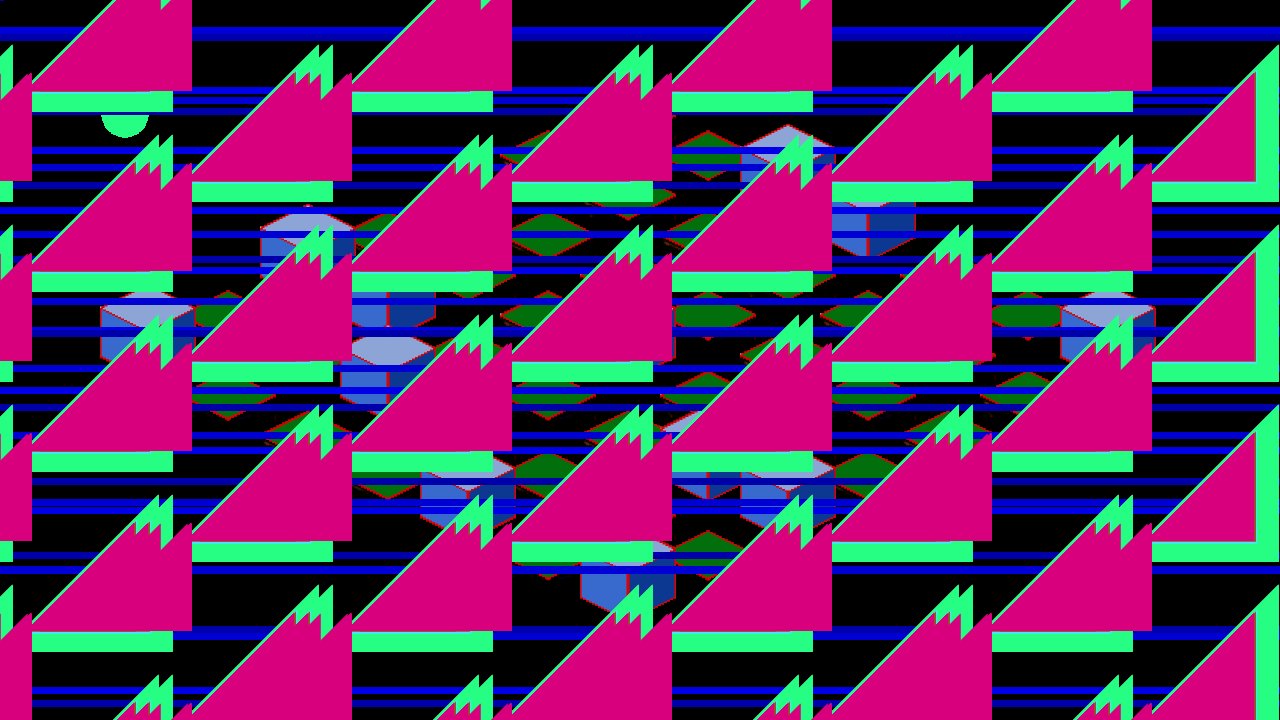

Live Coding

I am experimenting with live coding using Hydra, created by artist-coder-thinker Olivia Jack.

Hydra can also load external libraries, which makes it possible to add features such as audio synthesis (with Tone.js) and 3D graphics (with three.js) in JavaScript.

What really excites me is that Hydra makes coding, normally a solitary activity, strangely social: both through “jam”-style collaborative coding and through the simple ability to share snippets and ideas with others.
